Communication Literacy Survey

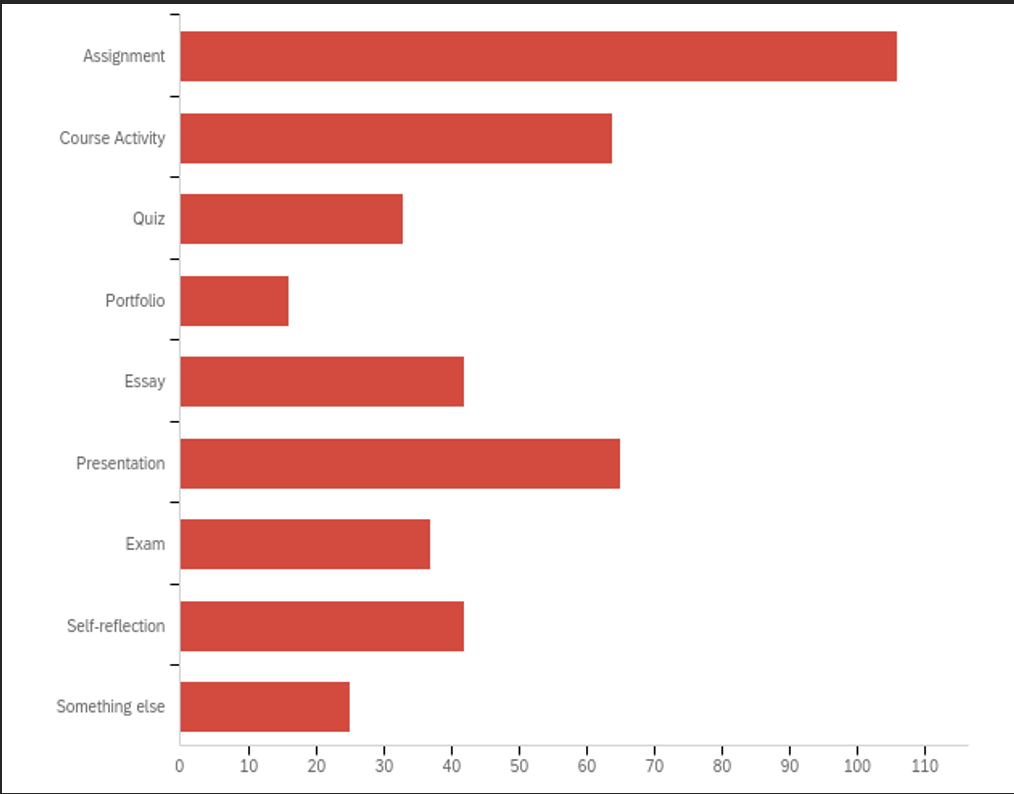

On September 15, 2021, the Office of Planning and Assessment launched a new Communication Literacy (CL) survey through Qualtrics to learn more from faculty teaching CL courses. Specifically, we wanted to know what types of assessment tools they were using, why they used those tools, and whether they could share examples from their courses. Our office sent the survey to over 500 faculty members and allowed two weeks for completion. We received 171 responses from most of the colleges at TTU, including 63 from the College of Arts and Sciences, 33 from the College of Media and Communication, and 13 from CASNR. We asked faculty to identify the types of assessment tools they used to evaluate communication literacy in their courses. The most frequently reported tools were assignments (~25% of responses), presentations (~15%), and course activities (~15%).

While our content analysis of why faculty used these assessments and how they are used in each course is ongoing, most responses described document creation, group discussions, or group/individual presentations. Document creation was field-specific, asking students to effectively communicate findings, analysis, visual messages, or other content; it is closely associated with the "Writing Intensive" course label used before Communication Literacy was adopted. Discussions included group exercises, debates, data interpretation, and other dialogues between groups. Finally, group and individual presentations covered research findings, laboratory/technical briefings, or content summaries from the course. Most assessment tools were used multiple times throughout the semester, but a few courses used a one-time assessment, such as a final presentation or exam.

With the findings from the Communication Literacy survey, we can better understand the types of assessment faculty are using and how to improve standardization of assessments across the university while still maintaining the field-specific practices that are most appropriate. Our office will use this pilot survey to inform future data collection efforts that best meet the needs of faculty as they assess Communication Literacy courses.

Faculty Success Improvements

In 2021, Watermark renamed Digital Measures as Faculty Success. TTU is in the process of updating its documents and handouts, and we will update our videos this summer to reflect the change. Going forward, we will use the abbreviation FS to refer to the software. Thanks to everyone for your patience as we all incorporate this change.

Work Requests

One of the less commonly known procedures surrounding Faculty Success (FS) is how reports or screens are updated. While TTU owns the FS data and has full control over the environment, we rely on FS developers for updates to the user interface (UI), imports of large datasets, and general consultation on errors and site use. When a change is requested, OPA evaluates both the functionality of the request and whether it is technically possible. In some situations, the best solution is a simple one that can be put into place with little or no disruption to site use.

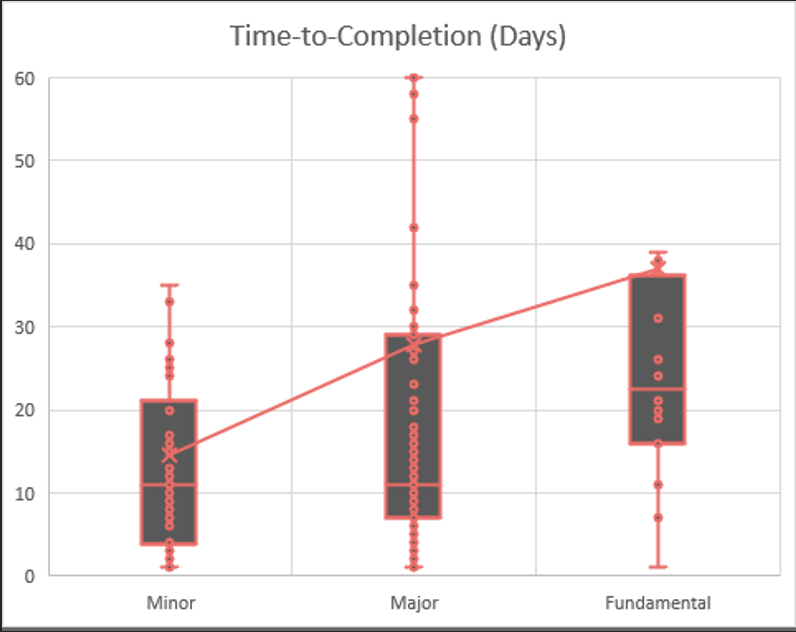

The chart above shows how OPA has handled work requests in the past year. Work requests were categorized as Minor (such as altering the wording of a metric or completing a data copy request), Major (such as data imports or adding the School of Veterinary Medicine to our various screens), or Fundamental (such as requests related to the Graduate School Expansion Project, updates to our query, or the creation of new screens in the system).

Over the past year, OPA handled about 145 requests, which we categorized as roughly 37% Minor, 52% Major, and 11% Fundamental. We are pleased with this breakdown, though the size of the Major category suggests that our criteria for it may need refinement. Part of the difficulty is that a request's importance is often unknown until we begin working with Watermark. These work requests are separate from the OPA support email, which handled around 400 troubleshooting requests last year. The OPA support email allows faculty to request troubleshooting directly, particularly for issues such as being unable to log in or needing manual changes to data.

HB2504 Compliance

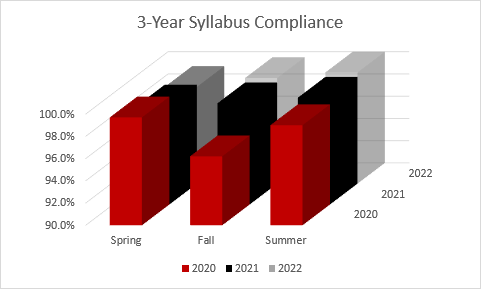

TTU maintained its consistently high syllabus compliance in Spring 2022, with compliance right at 99% as OPA continues to work with departments on HB2504-related issues. This term, issues with the HB2504 query required additional attention and at times required us to pause the import while we continued work on the Graduate School Expansion Project.

Other Faculty Success Improvements

Throughout the year, OPA must react to some work requests and troubleshooting issues as they arise. For example, during the Spring term, many requests come in for updates to Annual Faculty Report templates or to other screens in FS that need a change. During the Fall, we often receive more questions about evaluations and general records compliance. OPA is always willing to hear suggestions and make changes when doing so is possible and beneficial. In the past year, changes to FS have included:

- Development of a Comprehensive Performance Evaluation form in Faculty Success

- Preliminary creation of a Tenure Dossier, which will allow for a single report to be run for P&T procedures

- Graduate School Expansion Project and development of an Individual Development Plan report to allow tracking of graduate student research, accolades, and progress

- Continuous improvements to the data query that populates FS, including consistent updating of enrollment values, faculty/staff rank, and the new inclusion of a Student College and Department, which will allow faculty and staff to list their studies and help categorize students when they are added to the system

Many other changes have been made over the past year, but those listed above affect users across the system. Our goal is to continue making the system easier to use with the assistance of faculty and administration.

Please join us for the annual Texas Association for Higher Education Assessment conference on the Riverwalk in San Antonio! The proposal submission site is open through April 15. We'd love to have TTU faculty representation in San Antonio. For more information about the conference, visit https://txahea.org/.

Highlights from the Support Services Institutional Effectiveness Committees

As part of Texas Tech's SACSCOC accreditation, OPA meets twice a year with the Support Services Institutional Effectiveness (SSIE) Committee, which guides the direction and scope of our assessment process as it pertains to Administrative, Academic, and Student Support Services. The Committee serves in an advisory capacity to guide OPA toward effective and representative assessment that aligns with the needs of both the university and SACSCOC. We are grateful to the following representatives for serving on the Support Services IE Committee:


The SSIE Committee met in January 2022 and began the peer review process of the 2020-2021 Administrative, Academic, and Student Support Services assessment reports, providing valuable feedback to our departments. All members participated in a simple training session on how to use the Support Services Assessment Rubric, and committee members completed approximately 7-8 reflections on their peer Support Service departments.

During this meeting, Kara Page briefed the Committee on the changes made to the annual assessment report. These changes primarily introduce more business-centered terminology, which better represents the processes and practices in our Support Service departments. The changes were well received, and there was consensus that the new format will help departments more accurately demonstrate their impact on students and the university.

The University-Level Institutional Effectiveness Committee (ULIEC), which has historically been composed of College representatives, has also expanded to include representatives from our Support Services units. OPA's goal is to assess the student experience across the entire university, not simply within the higher-profile units. To ensure complete department representation, OPA has expanded its assessment review process to include the following programs as part of the Administrative, Academic, and Student Support Services:

- Athletics

- Division of Diversity, Equity, and Inclusion

- International Affairs

- Risk Intervention and Safety Education

- Title IX

- eLearning and Academic Partnerships

- Institutional Advancement

- Institutional Research

- Office of Research & Innovation

- Office of the Provost

- Payroll and Tax

- Safety and Security

- Student Financial Aid & Scholarship

- University Outreach and Engagement

- President's Office

- Provost's Office

- Texas Tech Alumni Association

Note that we have renamed this group from Non-Academic representatives to Support Service representatives. We feel the term Support Service better represents the wide variety of necessary work they do.

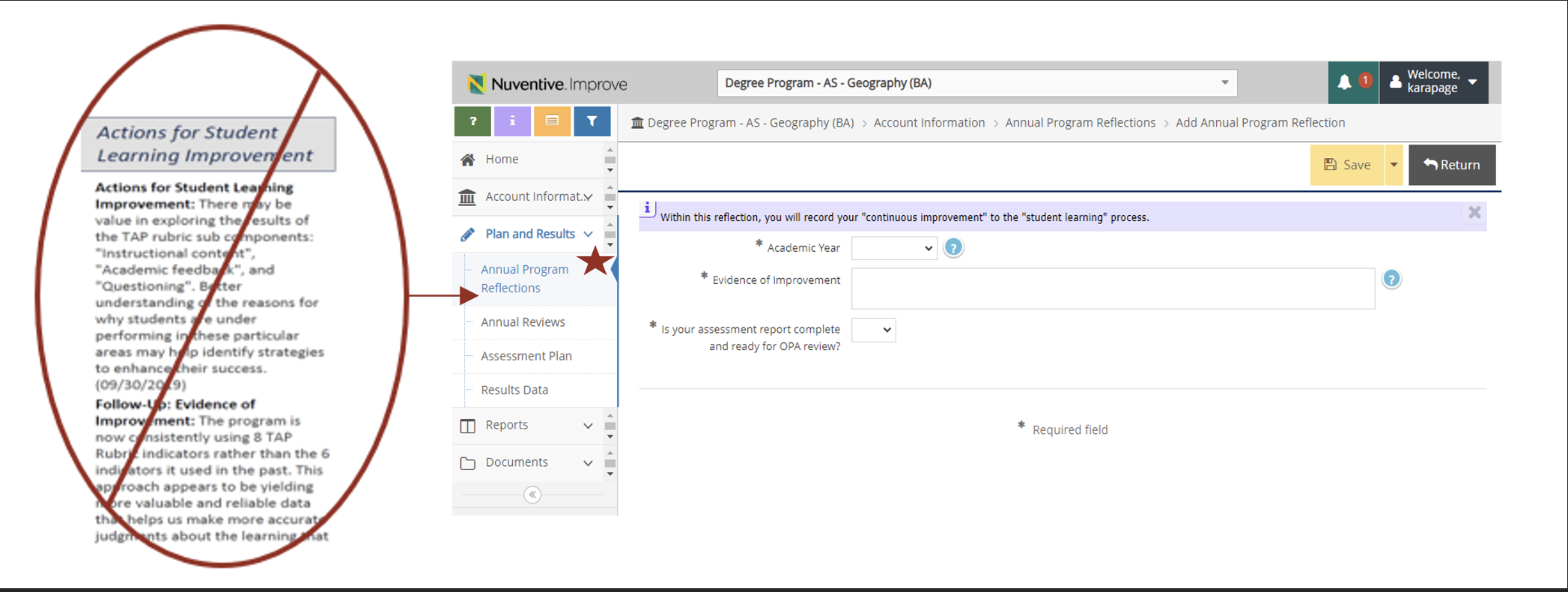

Changes to Nuventive Improve Reporting

In keeping with OPA's goal of continuous improvement, we are happy to announce that our annual academic reporting process has been updated based on faculty feedback. We want programs to be able to portray their successes in updating or improving the student learning process. Rather than reporting multiple actions for improvement and subsequent follow-up statements, Program Coordinators will now report a single narrative discussing either actions taken to improve student learning or a plan for improving it. The reflection section now takes a holistic view of degree program improvement rather than focusing solely on assessment method improvement. This broader scope allows programs to showcase curriculum or course sequence changes, faculty training, or any community engagement that may influence students' opportunities to master the program's specific outcomes.

Here is a snapshot of our changes:

To help Program Coordinators navigate these changes, the following instructional documents and the assessment page on the OPA website are available:

- Annual Reflections

- Analysis of Results

- OPA Website Assessment Page

OPA Assessment Spotlight

Auxiliary Services

Patrick Albritton

By: Kara Page, Senior Administrator

What is your position and what do you do for Texas Tech?

I am the Assistant Vice President of Auxiliary Services (Aux Svs), a non-academic division of Administration & Finance that extends beyond the learning environment of classrooms. We are the golden thread that enhances the Red Raider quality of education through student experiences and services. We are the central office for the following service departments: Hospitality Services, University Student Housing, Student Union & Activities, University Recreation (URec), Student Health Services, and the TTU Campus Store. Our mission is to attract and retain a diverse community that provides the essential programs and services required for every Red Raider to achieve their highest potential. We strive to continually enhance campus life for every Red Raider.

How long have you been at Texas Tech?

I have been with Texas Tech for 6 months. After graduation, I spent 25 years in the Air Force before returning to my alma mater.

How did you get involved with assessment?

As an engineer and retired military officer, I see feedback and assessments as critical to understanding how a system or organization is functioning. Upon starting my new position at Texas Tech, my team and I developed Auxiliary Services' strategic goals, theme, mission, and vision to align with and support Texas Tech University's Pathway to 2025 Strategic Plan. To capture and assess our progress, from divisional goals to individual growth and productivity, we decided to align our annual assessments in the Office of Planning and Assessment's Nuventive Improve with yearly evaluations, and we adjusted our input timing to accommodate the evaluation schedule. We developed our annual report to highlight the impact of the services we provide and how those services lead to a better student experience. In our annual report, we introduced the “Golden Thread” to illustrate how Auxiliary Services weaves a thread of excellence to increase student success. Using the Nuventive tool allowed us to standardize departmental inputs and get on an existing, repeatable cycle. Auxiliary Services assesses programs, services, facilities, and teams in terms of financial stewardship, customer needs, and enrollment impacts on Texas Tech University.

How do you use assessment in your job? What are some interesting assessment techniques you have used or are planning to use?

Texas Tech Auxiliary Services has partnered with Rawls College of Business' Center for Sales & Customer Relationship Excellence to develop a strategy to enhance student enjoyment and recognize which aspects of Auxiliary Services have the greatest impact on our stakeholders. Through the Sales & Customer Relationship Strategy Competition, we aim to uncover which factors inhibit enjoyment and which activities are the biggest sources of student complaints. Knowing this will allow us to identify opportunities to improve our Housing, Hospitality, and URec programs and services.

Is there anything else you would like to share about assessment?

Assessment is critical in the execution of my job. I am a student of the OODA loop, a decision-making process developed by U.S. Air Force Colonel John Boyd. The OODA cycle, observe-orient-decide-act, requires the system to be assessed through examination and compared back to goals before decisions are implemented. This is very similar to Dr. Deming's Plan, Do, Study, Act cycle of continual improvement. This is exactly what the annual assessment forces the Auxiliary Services leadership team to do: evaluate the effectiveness of previous decisions before we align next year's actions.

What is your hometown or where do you tell people you are from?

I grew up in Austin, Texas.

What do you like to do when you are not working?

Spend time with my family – it doesn't matter what we are doing.

What is something you have not done but would like to do?

I have landed in Shannon, Ireland, several times returning from overseas deployments, but I have never had the chance to set foot outside of the airport. I hope to one day take a tour of Ireland.

Long-Term Reaffirmation Planning

The Office of Planning & Assessment is currently shifting its priorities to long-term reaffirmation planning. The Compliance Certification Report (CCR) is due in September 2024, likely around Labor Day weekend. As we prepare for this voluminous report, we are creating back-end file-saving procedures in Microsoft Teams. We expect to conduct most of our work in Microsoft Teams and have already created a SACSCOC Reaffirmation of Accreditation Teams site. During Spring 2022, we hope to complete the site with historical documents and guidelines from the SACSCOC Resource Manual. A more detailed timeline follows.

- September 2022 – Assemble writing teams, announce writing resources to teams, University-level Institutional Effectiveness Committee to begin exploring QEP topics

- Fall 2022 – QEP Topic Development Committee explores recommendations provided by University-level Institutional Effectiveness Committee, QEP Topic finalized by Thanksgiving 2022, QEP Director position posted by December 2022 and hopefully hired by March 2023

- January 2023 – CCR first drafts due to OPA

- March 2023 – QEP Director hired, QEP Director assembles faculty/staff/student/alumni/community steering committee to draft the QEP proposal

- January 2024 – CCR second drafts due to OPA (editing and refinement to occur throughout January 2024 – August 2024)

- Fall 2024 – Preliminary/Pilot QEP is launched

- September 2024 – Compliance Certification Report due to SACSCOC

- January 1, 2025 – QEP Proposal due to SACSCOC (4-6 weeks before the on-site visit)

- Spring 2025 – On-site Visit (likely in February/March 2025)

Office of Planning and Assessment

Address: Administration Building, Suite 237, Box 45070, Lubbock, TX 79409-5070

Phone: 806.742.1505

Email: opa.support@ttu.edu