AI Teaching Resources

Generative AI is reshaping teaching, learning, assessment, research, and creativity. At Texas Tech University, faculty are engaging with this technology in diverse and evolving ways.

This site draws from existing university policy, national guidance, and evolving best practices to help you decide whether and how to use AI in ways that encourage innovation while protecting academic integrity, student data, and ethical standards. We anticipate that this page will grow alongside the technology, with your professional judgment and student learning at its core.

If you would like to talk about teaching with artificial intelligence and your concerns or ideas, please feel free to contact Lisa Low, Artificial Intelligence Fellow, or any member of the TLPDC team at 806-742-0133.


Syllabus Statements

How to add a syllabus in RaiderCanvas

Faculty are encouraged to include a clear statement in their syllabus regarding the permitted or prohibited use of generative AI. The following examples offer baseline language you may adapt to fit your course objectives and pedagogical values. Each version outlines student expectations and reinforces the importance of academic integrity.

Note that the statement specific to AI use on tests, quizzes, and exams could be included directly on test instructions and in course syllabi. The statement disclosing faculty use of AI is meant to model behaviors that we expect from our students and offer transparency about faculty use of AI in teaching.

Generative AI Use is NOT Permitted in This Course

All work in this course must be completed without the use of any artificial intelligence tools, including but not limited to ChatGPT, Claude, Gemini, DALL·E, Grammarly, or similar applications. Students are expected to apply what they are learning in this course to produce work for this class, drawing on their own developing knowledge, understanding, and skills. AI is not permitted at any stage of the assignment process. Using AI may constitute a violation of academic integrity and may be referred to the Office of Student Conduct. Please contact me if you have questions regarding this course policy.

Generative AI Use is Encouraged and Allowed in This Course

You are welcome to use generative AI applications (e.g., ChatGPT, Claude, Gemini, DALL·E, Grammarly, or similar applications) in this course, provided that your use aligns with course learning objectives and is properly documented according to instructions in this syllabus. You are responsible for ensuring that AI-generated content is properly cited, accurate, ethical, and free of misinformation or intellectual property violations. AI-generated content must never be submitted as your own work. Doing so may constitute a violation of academic integrity and may be referred to the Office of Student Conduct. Please contact me if you have questions regarding this course policy.

Any generative AI use should be cited AND clearly disclosed according to the instructions in this syllabus. An example of an APA citation (7th ed. format) is seen here: OpenAI. (2025). ChatGPT (May 2024 version) [Large language model]. https://chat.openai.com

Generative AI Use is Allowed Only for Specific Assignments in this Course

Generative AI applications (e.g., ChatGPT, Claude, Gemini, DALL·E, Grammarly, or similar applications) may only be used in this course for specific assignments where the instructor explicitly permits their use. Unless otherwise stated in the syllabus or assignment guidelines, AI assistance is not allowed. Using AI in unauthorized contexts or submitting AI-generated content as your own work may constitute a violation of academic integrity and may be referred to the Office of Student Conduct. When using AI in permitted contexts as outlined in the syllabus or explicitly permitted by the instructor, you are responsible for ensuring that AI-generated content is properly cited, accurate, ethical, and free of misinformation or intellectual property violations. AI-generated content must never be submitted as your own work. Doing so may constitute a violation of academic integrity and may be referred to the Office of Student Conduct. Please contact me if you have questions regarding this course policy.

Any generative AI use should be cited AND clearly disclosed according to the instructions in this syllabus. An example of an APA citation (7th ed. format) is seen here: OpenAI. (2025). ChatGPT (May 2024 version) [Large language model]. https://chat.openai.com

In This Course, Generative AI Use is Permitted for Assistance, Not Authorship

Artificial intelligence applications, including but not limited to ChatGPT, Claude, Gemini, DALL·E, Grammarly, or similar applications, may be used to assist with specific tasks, such as submitting a first draft to a generative AI application for help refining or evaluating original student-produced work (for example, grammar or sentence structure assistance). Students are expected to apply what they are learning in this course to author work for this class, drawing on their own developing knowledge, understanding, and skills. Using generative AI as a collaborator means that a student is not simply copying or submitting AI-generated work but is actively engaging with the AI tool as part of the creative or problem-solving process. Transactional use (uploading assignment instructions and then submitting the AI-generated result as your own) is prohibited and may constitute a violation of academic integrity, which may be referred to the Office of Student Conduct. When using AI in permitted contexts as outlined in the syllabus or explicitly permitted by the instructor, you are responsible for ensuring that AI-generated content is properly cited, accurate, ethical, and free of misinformation or intellectual property violations. AI-generated content must never be submitted as your own work. Doing so may constitute a violation of academic integrity and may be referred to the Office of Student Conduct. Please contact me if you have questions regarding this course policy.

Any generative AI use should be cited AND clearly disclosed according to the instructions in this syllabus. An example of an APA citation (7th ed. format) is seen here: OpenAI. (2025). ChatGPT (May 2024 version) [Large language model]. https://chat.openai.com

In This Course, Generative AI Use is Permitted For Planning Purposes, Not Authorship

Artificial intelligence applications, including but not limited to ChatGPT, Claude, Gemini, DALL·E, Grammarly, or similar applications, may be used for planning activities such as brainstorming, outlining, and idea development. However, all final submissions should show evidence that students have developed and refined these ideas on their own without additional generative AI use. Students are expected to apply what they are learning in this course to produce work for this class by drawing on their own developing knowledge, understanding, and skills. When using generative AI in permitted contexts as outlined in the syllabus or explicitly permitted by the instructor, you are responsible for ensuring that AI-generated content is properly cited, accurate, ethical, and free of misinformation or intellectual property violations. AI-generated content must never be submitted as your own work. Doing so may constitute a violation of academic integrity and may be referred to the Office of Student Conduct. Please contact me if you have questions regarding this course policy.

Any generative AI use should be cited AND clearly disclosed according to the instructions in this syllabus. An example of an APA citation (7th ed. format) is seen here: OpenAI. (2025). ChatGPT (May 2024 version) [Large language model]. https://chat.openai.com

Generative AI on Tests, Quizzes and Exams

To promote deep learning and critical thinking, students are expected to complete reading quizzes, tests, exams, etc. without the use of generative AI tools (such as ChatGPT, Claude, Gemini, Grammarly, or similar platforms). Relying on AI to answer these questions may limit your understanding of the material and may undermine your long-term academic and professional success. Any unauthorized use of AI, or collaboration and sharing of answers with others, may be referred to the Office of Student Conduct.

Instructor Use of Generative AI

I integrate generative AI into my teaching to enhance, not replace, human learning, creativity, and critical thinking. I use AI applications as collaborative partners for ideation, drafting, feedback, and refinement of teaching materials, but I maintain full responsibility for content and accuracy. I do not use this technology to assess student work. I do not input other people’s work or personally identifiable information into AI tools. My approach emphasizes ethical, transparent use aligned with academic integrity, equity, and the development of transferable skills. I also aim to model responsible engagement with emerging technologies, emphasizing thoughtful boundaries rather than rigid prohibitions. I strive for transparency around AI use by adding AI disclosure statements and/or citations to all work generated for this course in collaboration with AI tools.

Ethical Considerations

Potential limitations of AI tools

When considering AI tools, faculty should be aware of their limitations and of risks related to content, systemic and design issues, student learning, and privacy or legal considerations.

Content-related Risks:

- Misinformation and inaccuracies in AI-generated content that are presented by the AI as ‘truth’ but are factually incorrect (often referred to as “AI hallucinations”)

- Bias and unintentional harm stemming from the training data or model design, leading to stereotypical, exclusionary, or culturally insensitive outputs

- Inappropriate or offensive content that may still be produced despite content filters

Systemic and Design Risks:

- Algorithmic implications, including the potential for AI to reinforce harmful patterns, stifle innovation, make flawed inferences, or reflect opaque decision-making processes

- Adverse environmental impacts related to the energy consumption and carbon footprint of training and running large AI models

Student Learning Risks:

- Over-reliance on AI that may diminish critical thinking, writing fluency, and skill development, or lead to a loss of personal voice

- Equity issues may arise for students without consistent access to AI or paid subscriptions to AI applications

Privacy and Legal Risks:

- Data privacy and security risks if sensitive, proprietary, or personally identifiable information is entered into AI systems

- Intellectual property concerns when AI-generated content overlaps with copyrighted material or lacks clear ownership

The following examples illustrate some different uses of generative AI.

How Can a Faculty Member Use AI for Teaching?

| Correct Use | Incorrect Use |
| --- | --- |
| Example 1: Course Redesign. A professor inputs an existing course syllabus, learning objectives, and general assignment descriptions into an AI tool. The tool provides ideas for restructuring the course, sequencing topics differently, or incorporating new active learning strategies that align with the course goals. | A professor uploads detailed internal planning notes that include unreleased exam questions, proprietary teaching materials from another institution, or unpublished research examples. |
| Example 2: Assignment Development. A faculty member shares a publicly available disciplinary competency framework with an AI tool. The AI generates assignment prompts targeting specific competencies, helping to ensure alignment between assignments and learning outcomes. | A professor uploads unredacted student submissions from previous semesters, including names, IDs, or writing that could identify the student. |
| Example 3: Content Creation. An instructor uses AI to develop explanatory materials about complex concepts. By referencing open-access textbooks or academic sources, the tool helps create simplified summaries or diagrams that support student understanding. | A professor uploads full scans of a paywalled textbook, copyrighted images, or subscription-only journal articles. |
| Example 4: Assessment Planning. A professor enters general grading criteria and learning outcomes into an AI tool. The tool assists in creating a rubric template and proposes a variety of assessment formats (i.e., reflective journals, case studies, presentations) tailored to these outcomes. | A professor uploads a bank of multiple-choice questions from a licensed test-prep resource to “refresh” them. |
| Example 5: Pedagogical Innovation. A faculty member asks an AI tool to suggest evidence-based teaching strategies specific to their discipline. | A professor uploads subscription-only teaching guides or toolkits from a professional association. |

Ethical Implications of Grading with Generative AI

A longstanding responsibility for faculty is the role of assessor. Traditionally, we use our disciplinary expertise to assess a student’s learning of course material.

There are significant ethical considerations regarding the use of AI tools with grading, including implications for the role of faculty, public perception of a higher education degree, trust issues between students and faculty, and the value we place on feedback, assessment and transparency. The Office of Student Conduct reports an increase in questions from students about whether their personally identifiable information has been uploaded to AI detection software.

If a faculty member chooses to grade with AI, considerations should include differentiating the AI feedback from human feedback with disclosure statements, extending the AI feedback with the instructor’s critique or encouragement, or pointing out potential flaws with AI feedback. AI feedback seems most appropriate for low-stakes assignments or as a part of ungraded feedback to allow students to refine and draft their work.

We acknowledge that careful consideration should be given to the implications of AI grading. At a minimum, faculty should disclose any AI grading practices to students to model expected behavior in terms of transparent disclosure of AI use.

Cautions about Generative AI Tools and Data Privacy

As interest in generative AI tools continues to grow, it is important to proceed with awareness and caution. Currently, Texas Tech does not hold institutional agreements with generative AI providers. As a result, no university-level data protections are in place for faculty or students using these tools. Best practices suggest that you use discretion when entering any academic or research-related content and consider maintaining an “AI-use ledger” for institutional due-diligence to record the tool’s name, version, date, and type of content you are entering.

- Some AI tools may mine or retain the data you input, including sensitive or proprietary information. Always assume that any input into an AI tool – unless explicitly protected by a contract – is now public.

- No institutional safeguards currently exist to protect university users or data when using these tools.

- Use of AI platforms is at your own risk, especially when it comes to academic, research, or student-related materials.

- Current information security policies still apply and can be found at IT Security Policies.
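The AI-use ledger mentioned above can be as simple as a spreadsheet or CSV file. The following is a minimal sketch in Python, not an official TTU tool or format: the column names (`tool_name`, `tool_version`, `date_used`, `content_type`) and the sample values are illustrative assumptions based on the four items listed above. The ledger records only a general description of the content, never the content itself.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class LedgerEntry:
    """One row of a hypothetical AI-use ledger (field names are illustrative)."""
    tool_name: str      # name of the AI application used
    tool_version: str   # version or model label shown by the tool
    date_used: str      # ISO date, e.g., "2025-09-03"
    content_type: str   # general description only, never the content itself


def append_entry(stream, entry, write_header=False):
    """Write one ledger entry as a CSV row to an open text stream."""
    columns = [f.name for f in fields(LedgerEntry)]
    writer = csv.DictWriter(stream, fieldnames=columns)
    if write_header:
        writer.writeheader()
    writer.writerow(asdict(entry))


# Demonstration with an in-memory buffer; a real ledger might be a CSV file.
buffer = io.StringIO()
append_entry(
    buffer,
    LedgerEntry("ExampleTool", "v1", "2025-09-03", "course syllabus draft"),
    write_header=True,
)
print(buffer.getvalue())
```

To keep a persistent ledger, the same function could be called with a file opened in append mode, writing the header only when the file is first created.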

Microsoft Copilot is available through TTU single sign-on with your eRaider credentials. This sign-on addresses TTU authentication policies but does not mean that Copilot is officially supported or protected by a university-wide agreement. There is no guarantee that data privacy standards align with institutional expectations. Be aware that:

- Copilot may access and analyze content across your Microsoft 365 account, including Outlook, OneDrive, Teams, and more. Some users feel that this allows for personalized assistance and automation of your repetitive tasks, while others are uncomfortable with this level of access to personal data.

- Your data could be used to train or refine AI models, in accordance with Microsoft’s terms of service.

Academic Integrity

Instructors who incorporate generative AI should adopt a developmental, co-learning approach—supporting student growth while reinforcing ethical and academic standards. Faculty are encouraged to model responsible use themselves and reinforce that AI is a tool—not a substitute—for critical thinking, academic effort, and ethical learning.

According to Texas Tech’s guidance on academic misconduct and AI, instructors are responsible for clearly defining the appropriate use of AI in their courses and helping students understand the ethical boundaries of its use. This could include:

- Using the Texas Tech recommended AI syllabus statements and/or developing a more course-specific AI policy outlined in the syllabus, including the instructor’s requirements for preferred citation style and/or disclosure statement;

- Embedding clear AI usage guidelines in assignment instructions and nudging students toward reflective, responsible use;

- Redesigning assessments to function well in an AI-enabled environment, including rubrics that account for and evaluate AI-supported work or suspected AI misrepresentation of content;

- Teaching students how to use generative AI with appropriate considerations for privacy, intellectual property (IP), proprietary information (PI), and personally identifiable information (PII); and

- Guiding students to critically evaluate AI-generated content for bias, accuracy, and ethical concerns.

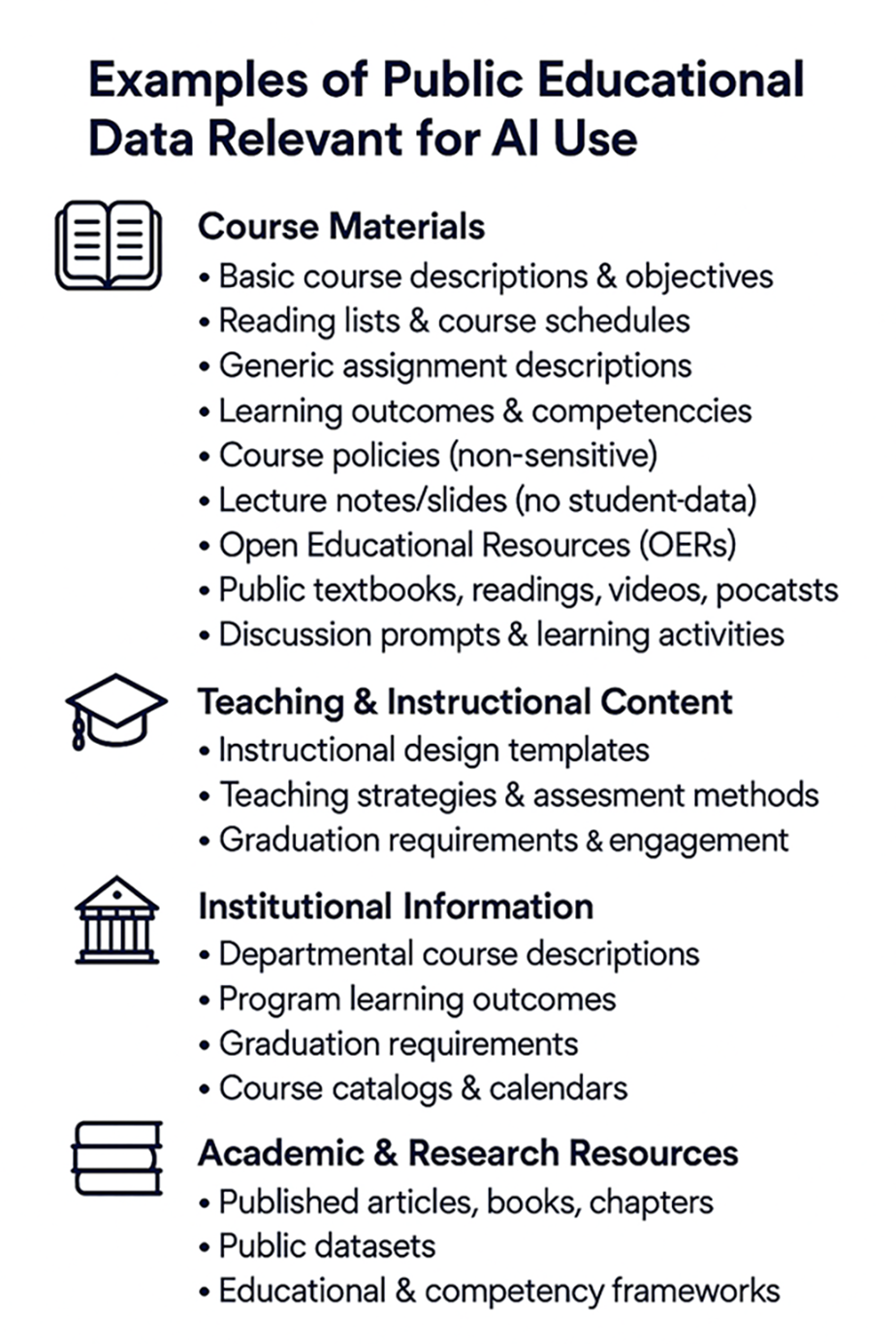

Data Protection

While some types of materials and data may be appropriate for use with generative AI tools, faculty should exercise professional judgment and carefully consider privacy, intellectual property (IP), proprietary information (PI), and personally identifiable information (PII) before using any AI tool. As such, any use of these tools should be approached with caution and in alignment with university values and ethical standards.

Private and Sensitive Data — Not Appropriate for Use with AI Platforms

Faculty should never input the following types of information into AI tools, even when using university-supported platforms:

- Personally Identifiable Information (PII) (e.g., names, eRaider, R-numbers, addresses, student records)

- Proprietary Information (PI) (e.g., internal operations, unpublished data)

- Intellectual Property (IP) not owned by the user or used without permission

- Institutional Data not already made public

- Confidential Data, including:

  - FERPA-protected student records

  - Unpublished research (without express consent)

  - Payment data (PCI)

  - Social Security Numbers

  - HIPAA-protected health information

  - Controlled Unclassified Information (CUI)

*For more information about the Family Educational Rights and Privacy Act (FERPA), please review information from the Office of the Registrar.

**If you have questions about data for a specific use case or are unsure about the sensitivity of data, please contact afdmd.staff@ttu.edu for assistance.

OpenAI ChatGPT. (2025, September 3). Generated response to prompt: "Create an infographic based on this uploaded about appropriate data for faculty use with generative AI." Retrieved from https://chat.openai.com.

Frequently Asked Questions

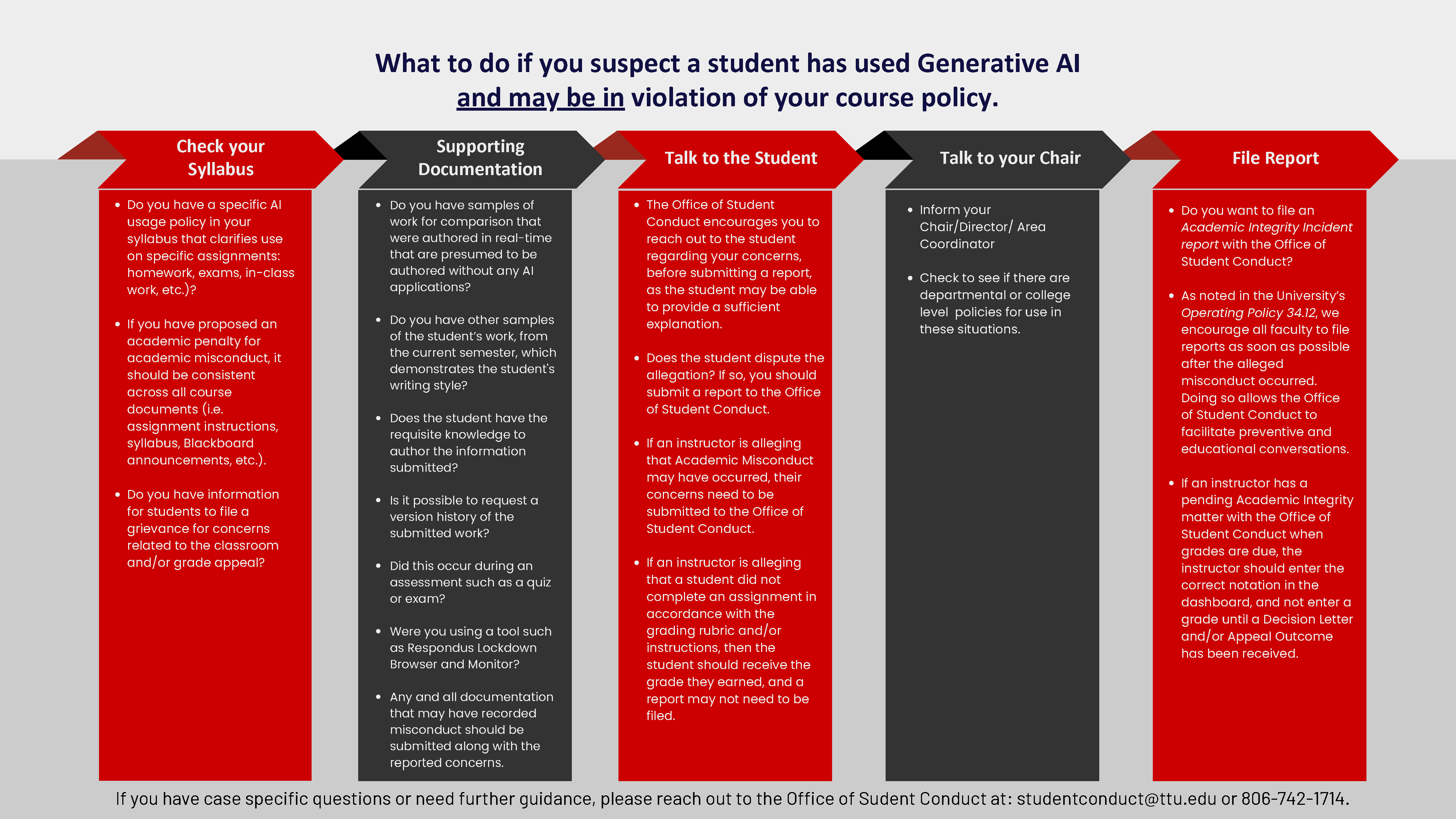

What can I do if I suspect academic misconduct with AI?

Many faculty are alarmed at potential academic misconduct related to AI and feel concerned about what the future may hold as AI becomes more capable of mimicking human voice and decisions. See: What Steps Do I Take if I Suspect Academic Misconduct with AI?

How do I talk to my students about AI?

Communicate with students about AI in the syllabus, in class during the first week of the semester, and again each time a task is assigned to remind them of your expectations for how they will use or not use AI in your course. The specific nature of your policy is up to you! As you think about what AI use you will (dis)allow, it can be extremely helpful to begin with the intended learning outcomes of your course and then consider how the use of AI might enhance, limit, or shift these.

Here’s a sample policy from a course that includes several smaller writing assignments. These instructions are included in each writing assignment to remind students of the syllabus policy discussed on the first day of class:

- "As you work on this assignment, you are welcome to use ChatGPT or another AI tool to assist you with brainstorming ideas, creating an outline, and drafting your first version of the text. In line with our course policy, up to 40% of your first draft may be generated by AI, and you must indicate these portions of the text by marking them in red."

- "During the peer review stage, AI use is prohibited, because it is important for your peers to hear your authentic voice and learn from your perspective: How did what they wrote “land” with you? What questions do you have after reading it? What would you suggest to improve upon the first draft? I have provided a rubric to guide your review, and you will be asked to insert comments into the text you are reviewing."

- "When it comes to the final draft of your paper, AI use is also prohibited. At this stage, you will have everything you need to produce a polished draft, including feedback from me and two of your peers. This policy supports one of the course's main learning objectives: To foster a community of human learners who support each other in developing critical thinking skills. That is why we agree to use AI in ways that enhance our efficiency without diminishing our ability to think independently and build connections with each other."

What are simple ways to start incorporating AI into my classes?

Everywhere that we turn, it seems like we hear about artificial intelligence and predictions about its impact. Dean Inara Scott at Oregon State University suggests that although a “one size fits all” approach to AI is ill-advised, one universal directive is clear: Faculty cannot ignore AI. Our students are using it now and will be using it in their future careers. Perhaps the starting point is to assess your digital mindset (Neeley and Leonardi, 2022), in other words, to reflect on your attitudes and behaviors about AI and consider how you may need to stretch to see new ways of thinking about adopting AI and potential changes to your teaching. Here’s a simple plan adapted with permission from Dean Scott’s suggestions to our context here at Texas Tech:

- Learn the basics. If you haven't already, spend time using some form of generative AI. Your discipline's tools may vary, but at a minimum, everyone can start with free versions of ChatGPT or Bing to get familiar with the technology. Does this sound overwhelming? Contact the TLPDC and ask for Lisa Low, our faculty fellow. Lisa would be glad to meet with you to have a hands-on look and conversation about your classes. There are also many articles online related to ChatGPT and generative AI in the classroom. Consider this flow chart developed by the AI Resources and Guidelines Committee or this overview and list of resources from the Oregon State University Center for Teaching and Learning.

- Choose your AI policies. At Texas Tech, instructors set the AI policies for their classes based on their teaching philosophies and learning goals. You can find three recommended syllabus statements from the AI Resources and Guidelines Committee. Consider the ways in which your students might use AI. The Committee does not recommend prohibiting AI in your course, as you are likely to spend too much time policing this policy and AI is now woven into almost all forms of web searching, word processing, spreadsheet generation, and presentation software. Regardless of what policy you select, talk to your students about your “whys.”

- Consider Modifying or even Eliminating "Busy Work" Assignments. Students will be inclined to use AI to do work that they do not see the value of or don't have to engage with, which might include summarizing a reading, responding to simple discussion prompts, or the traditional 2–3-page essay assignments.

- Review more difficult assignments: Are you testing critical human skills? Students will be inclined to use AI for high-stakes assessments, particularly if they aren’t confident (and of course, we know that this is not a new practice, but just a new form of temptation). It is the instructor’s prerogative and disciplinary expertise that allow them to know what students need to learn, but in our new AI environment, it’s worth considering what information is foundational and needs to be memorized and what skills are essential.

Simple Ways to Get Started with AI by Suzanne Tapp

What are some tips for using AI in online classes?

- Clearly communicate your expectations regarding AI use (that is, when it is and is not okay to use AI in this course) in the syllabus and in conversation with students during the first week of class.

- Help students connect course learning goals with their own sense of purpose. Why should students care about the knowledge and skills they will gain in this course, and why is it important that they participate actively in their own learning? How could AI tools support or hinder them in this process?

- Integrate multiple modes of engagement to enhance writing assignments. Some examples are asynchronous annotations of written work, peer feedback rounds, short oral presentations, the collective development of mind maps, and other opportunities for students to demonstrate their engagement with course material.

- Adjust assessments to reward the process of learning instead of just the final product. For example, a final essay assignment could be broken down into smaller tasks that reward effort throughout the semester and ensure a better final product:

  - Week 7: Asynchronous brainstorming on a collective document to gather topic ideas, research questions, and primary and secondary works to consider. (Students earn points for contributing to the document.)

  - Week 8: Research pairs are established, topics are selected, and research pairs submit a project plan that includes a timeline for completion. (Students earn points for submitting a plan.)

  - Week 11: Research pairs submit a research poster draft and receive feedback from one other research pair via a rubric. (Students earn points for submitting a draft AND for providing feedback.)

  - Week 14: Research pairs submit a 5-minute video in which they present preliminary findings from their work and receive feedback from another research pair via the same rubric. (Students earn points for submitting a recording AND for providing feedback.)

  - Week 15: Research pairs make final adjustments to their poster and submit it, along with a copy of their research paper, for final evaluation by the instructor via the same rubric. (This is the final product, which will hopefully be higher in quality due to the scaffolded nature of the assignment, the sustained team effort, and two rounds of formative peer feedback.)

How can I use AI to streamline teaching tasks?

Magic School AI offers tools that can speed up the process of designing lesson plans, writing assignment instructions, creating rubrics to evaluate student work, remixing tests, and more. A free version is available.

How Do I Teach My Students to Write Helpful AI Prompts?

AI tools can help provide personalized learning for a student, but only when directed to do so! How do we empower and teach our students to write effective prompts?

Remind students that prompts can be written in conversational language and can be formatted as multi-step questions. If the user wants citations, ask for them. Be aware that some AI tools have word limitations for prompts. Learn more about Teaching Students to Write Prompts.

Ideas for AI Integration

Idea #1: AI as a tutoring tool

Have students use AI as a tutoring tool to help them prepare for tests by checking their understanding of course material.

Example: OpenAI’s ChatGPT4.0 is now available for free and offers enhanced capabilities in understanding and discussing images, voice, and text in over 50 languages. Students could use tools like this to practice their speaking and writing skills (in English or in other languages) in real time. AI can provide customized, constructive feedback on aspects such as pronunciation, lexical choice, register, and tone. You might consider asking students to submit a transcript of their AI tutoring session as a formative assessment.

Idea #2: Boost AI literacy and critical thinking skills

Ask students to evaluate AI-generated content and compare it to human-generated content to boost their AI literacy and critical thinking skills.

Example: Task students with identifying key ideas and main arguments in an AI-generated text and then ask them to find and cross-reference external source material to engage in lateral reading. Students could work in pairs to create an annotated list of sources that includes their thoughts on the motivation of the authors, the evidence and logic they use to build their argument, any biases they detect, as well as omissions and hallucinations they find in the AI-generated text.

Idea #3: Reframing your assignments

If using AI does not align with your learning goals, try reframing your assignments to make it more difficult to do so.

Example: Consider incorporating visual elements (images, videos, etc.) into assignments or discussions. This may deter AI use, as visual elements are more difficult to copy into AI tools. Ask students to analyze, interpret, or provide context for the visual element.

Example: Incorporate something specific from a class discussion (e.g., “Analyze and critique a key argument from one of our class discussions, using evidence from the assigned readings and your own perspective to support your analysis.”). Please note that this strategy is not foolproof, as you typically cannot “outprompt” AI.

Example: You might try requiring that students integrate your assigned readings, through direct quotes, into their written responses. It takes a little more work to get AI to do this, which might be a deterrent.

Example: Timed assignments may be helpful for short written responses or quizzes. The idea is that limited time may prevent students from accessing AI.

Idea #4: Rubrics to evaluate AI generated content

Design rubrics to evaluate AI generated content if you are concerned about students’ misuse.

Example: Some folks suggest emphasizing specificity and concision on your grading rubric (as both are problematic for AI). Some faculty basically tag portions of the writing with YAST (You Already Said This) and give point deductions, as AI responses are known to be repetitive.

Example: AI is good at summarizing, but not great at making connections. Typically AI does not do a good job of weaving together specific sources to form a coherent argument or conclusion. If you use a rubric, you could have a section on synthesis, for example, "articles are connected to one another and strengths/weaknesses and similarities/differences are summarized. A paper that gets full marks will show how all articles fit together into a coherent point."

Example: Some descriptors in the rubric could include:

- Unusual or overly-complex sentence construction: AI might sometimes generate sentences that are grammatically correct but unusual or overly complex, especially when it’s trying to generate text based on a mixture of styles or genres.

- Overuse of certain phrases or vocabulary: AI models can sometimes overuse certain phrases or vocabulary that they have learned during training. This is the result of the AI trying to fill up space with relevant keywords.

- Lack of depth/analysis: AI-generated writing is better at static writing tasks than creative or analytical writing.

- Format and structure: AI-generated sentences are typically shorter and lack complexity, creativity, and varied sentence structures.

Idea #5: AI tools as support

Review your course learning objectives and consider whether AI tools might support these at various stages of the learning process.

For instance, could AI be helpful as a beginning-of-process tool (e.g., at the brainstorming or drafting stage of a project), or would it be more suitable as an end-of-process tool (e.g., at the revision or final editing stage)? Leon Furze takes a deep dive into this topic in The Myth of the AI First Draft. Your own approach will depend on the learning outcomes you want your students to achieve.

Small Bytes Blog

Background Information

Using a Human-Centered and Pedagogically Appropriate Approach (UNESCO)

Researchers and educators should prioritize human agency and responsible, pedagogically appropriate interaction between humans and AI tools when determining whether and how to use generative AI. This approach includes five key considerations:

- Educational value: The tool should address human needs and enhance learning or research more effectively than a no-tech or alternative approach.

- Intrinsic motivation: Educators’ and learners’ use of the tool should stem from internal motivation, not external pressure.

- For example, an instructor prompts ChatGPT to generate possible expansions or case studies to add to a discussion question prompt but does not rely on ChatGPT to write all questions due to lack of time.

- Human control: The use of the tool must remain under the control of human educators, learners, or researchers.

- Contextual appropriateness: The selection and organization of AI tools and their content should align with:

- the expected outcomes, and

- the nature of the target knowledge (e.g., factual, conceptual, procedural, or metacognitive) or the type of problem (e.g., well-structured vs. ill-structured).

- Human engagement and accountability: Usage should promote human interaction with AI, support higher-order thinking, and ensure human accountability for:

- the accuracy of AI-generated content,

- teaching or research strategies, and

- their influence on human behavior, particularly recognizing that AI may positively influence our creativity and brainstorming, or it may tempt us to take shortcuts, accept information at face value with minimal investigation, or misrepresent content as our own.

Co-Designing the Use of Generative AI in Education and Research

The use of generative AI in education and research should not be imposed through top-down mandates or driven by commercial hype. Instead, its implementation should be co-designed by teachers, learners, and researchers. This co-design process must be supported by rigorous piloting and evaluation to assess both effectiveness and long-term impact.

To use GenAI to co-design appropriate resources and interactions, UNESCO proposes a framework for faculty that prioritizes six principles. We are asked to consider:

- Appropriate domains of knowledge or problem types (meaning the purpose of the course and the learning outcomes)

- Expected outcomes from using Generative AI

- Appropriate tools and comparative advantages of Generative AI

- User requirements (skills, knowledge, ethical awareness)

- Required human pedagogical methods and example prompts

- Ethical risks involved in implementation

This co-design framework encourages pedagogically sound uses of Generative AI that uphold human agency. The following list offers a starting set of domains in which Generative AI could be responsibly integrated across education and research:

- Supporting research practices

- Assisting in classroom teaching

- Coaching learners in the self-paced development of foundational skills

- Facilitating higher-order thinking

- Aiding learners with special needs

We recommend visiting this UNESCO document to view charts illustrating how co-design might work in practice as related to each of the domains above.

Texas House Bill 149: Texas Responsible Artificial Intelligence Governance Act (TRAIGA)

TRAIGA, or the Texas AI Act, goes into effect on January 1, 2026. It applies to developers and “deployers” of AI systems operating in Texas, in both the public and private sectors, but expressly excludes institutions of higher education and hospital districts. It is included here for information and awareness purposes.

According to a June 24, 2025 article on wiley.law, “The law places obligations and restrictions on government use of AI and prohibits a person from developing or deploying AI systems for certain illegal purposes. The new law also amends existing privacy laws to address AI-specific issues. Finally, the law establishes the Texas Artificial Intelligence Council and creates a regulatory sandbox program for artificial intelligence systems.”

AI Consultations with AI Faculty Fellow

If you would like to talk about teaching with artificial intelligence and your concerns or ideas, please feel free to schedule a teaching consultation with Lisa Low, Artificial Intelligence Fellow.

Register now!

Teaching, Learning, & Professional Development Center

Address: University Library Building, Room 136, Mail Stop 2044, Lubbock, TX 79409-2004

Phone: 806.742.0133

Email: tlpdc@ttu.edu